Create Periodic Tasks with Django and Celery

Django is a high-level Python web framework that encourages rapid development and clean, pragmatic design. Built by experienced developers, it takes care of much of the hassle of web development, so you can focus on writing your app without needing to reinvent the wheel. It’s free and open source.

Celery is a simple, flexible, and reliable distributed system to process vast amounts of messages, while providing operations with the tools required to maintain such a system.

I like this pairing because it is easy to set up and simple to debug.

We won’t go into depth about how to install Django. Instead, let’s talk about how to connect the two (Django + Celery).

First, you need to install Celery with a broker of your choice (Redis, RabbitMQ, or Amazon SQS). In this tutorial we will use Redis.

Redis is an open source, in-memory data store used by millions of developers as a database, cache, streaming engine, and message broker.

- Install Redis:

No long explanation is needed here; follow the installation instructions for your OS:

https://redis.io/docs/getting-started/
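Before moving on, it can help to confirm that the Redis server is actually listening. The following is a minimal sketch using only the Python standard library; it assumes Redis runs on localhost at the default port 6379 without a password, and the function name redis_is_reachable is made up for this example.

```python
import socket


def redis_is_reachable(host="localhost", port=6379, timeout=1.0):
    """Return True if the server at host:port answers PING with +PONG."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            # Redis accepts plain-text "inline" commands terminated by CRLF.
            conn.sendall(b"PING\r\n")
            reply = conn.recv(64)
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hosts.
        return False
    return reply.startswith(b"+PONG")


if __name__ == "__main__":
    print("Redis reachable:", redis_is_reachable())
```

A server that requires authentication replies with an error instead of +PONG, so this check reports False in that case even though the server is up.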

- Install Celery:

As mentioned, because we are using Redis, we can install Celery together with the Redis client library (the Python package, not the Redis server itself) with a single command:

    pip install -U "celery[redis]"

More details here:

https://docs.celeryq.dev/en/stable/getting-started/backends-and-brokers/redis.html#broker-redis

Or with two commands:

    pip install celery
    pip install redis

- Celery configuration:

First, inside your Django project package (next to the settings.py file), create a file called celery.py and put this code in it:

    import os

    from celery import Celery

    # Set the default Django settings module for the 'celery' program.
    os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'src.settings')

    app = Celery('src', broker='redis://localhost')

    # Using a string here means the worker doesn't have to serialize
    # the configuration object to child processes.
    # - namespace='CELERY' means all celery-related configuration keys
    #   should have a `CELERY_` prefix.
    app.config_from_object('django.conf:settings', namespace='CELERY')

    # Load task modules from all registered Django apps.
    app.autodiscover_tasks()


    @app.task(bind=True)
    def debug_task(self):
        print(f'Request: {self.request!r}')

Then, in the __init__.py file of the same package, enter this code:

    # This will make sure the app is always imported when
    # Django starts so that shared_task will use this app.
    from .celery import app as celery_app

    __all__ = ('celery_app',)

Note: Replace “src” with your project name.
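Because celery.py loads its configuration with namespace='CELERY', further Celery options can live in settings.py as CELERY_-prefixed names. Here is a minimal sketch; the values are illustrative assumptions, not recommendations from this tutorial.

```python
# settings.py (fragment): illustrative values only, adjust for your project.

# Time zone Celery uses for scheduling; assumed here to be UTC.
CELERY_TIMEZONE = "UTC"

# Report a STARTED state when a worker begins executing a task.
CELERY_TASK_TRACK_STARTED = True

# Hard time limit for a single task run, in seconds (30 minutes).
CELERY_TASK_TIME_LIMIT = 30 * 60

# Optional: the broker URL can also be given here instead of the
# broker= argument in celery.py (assumes Redis on its default port).
CELERY_BROKER_URL = "redis://localhost:6379/0"
```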

For more details about configurations, take a tour here:

https://docs.celeryq.dev/en/stable/django/first-steps-with-django.html

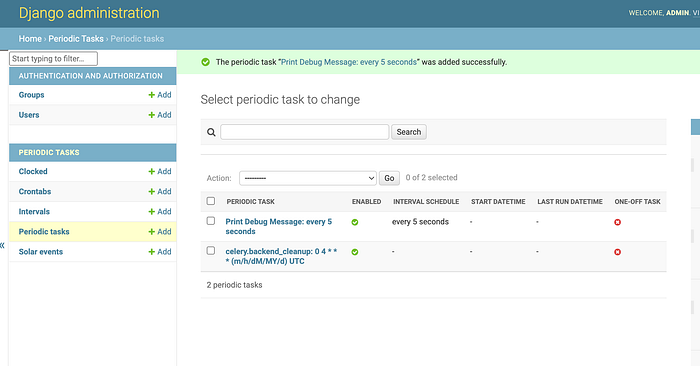

- Control Tasks from Admin Panel:

Custom scheduler classes can be specified on the command line (the --scheduler argument).

The default scheduler is celery.beat.PersistentScheduler, which simply keeps track of the last run times in a local shelve database file.
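With this default scheduler, the schedule is typically declared in configuration rather than in a database. Here is a minimal sketch, assuming the CELERY_ settings namespace used in celery.py and a hypothetical task named myapp.tasks.heartbeat:

```python
# settings.py (fragment): a static schedule read by celery beat at startup.
CELERY_BEAT_SCHEDULE = {
    "heartbeat-every-30-seconds": {
        # Dotted name of the task to run (hypothetical; use your own task).
        "task": "myapp.tasks.heartbeat",
        # Interval between runs, in seconds.
        "schedule": 30.0,
        # Positional arguments passed to the task on each run.
        "args": (),
    },
}
```

Changing an entry like this means editing settings and restarting beat.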

There’s also the django-celery-beat extension that stores the schedule in the Django database, and presents a convenient admin interface to manage periodic tasks at runtime.

- Install django-celery-beat:

1. Install the package:

    pip install django-celery-beat

2. Add the django_celery_beat module to INSTALLED_APPS in your Django project’s settings.py:

    INSTALLED_APPS = (
        ...,
        'django_celery_beat',
    )

3. Apply Django database migrations so that the necessary tables are created:

    python manage.py migrate

4. Open your admin dashboard and start creating your periodic tasks there.

- Start Services:

Now run both of these commands, each in its own terminal, and enjoy:

    celery -A src beat -l INFO --scheduler django_celery_beat.schedulers:DatabaseScheduler

    celery -A src worker -l INFO

Note: Replace “src” with your project name.